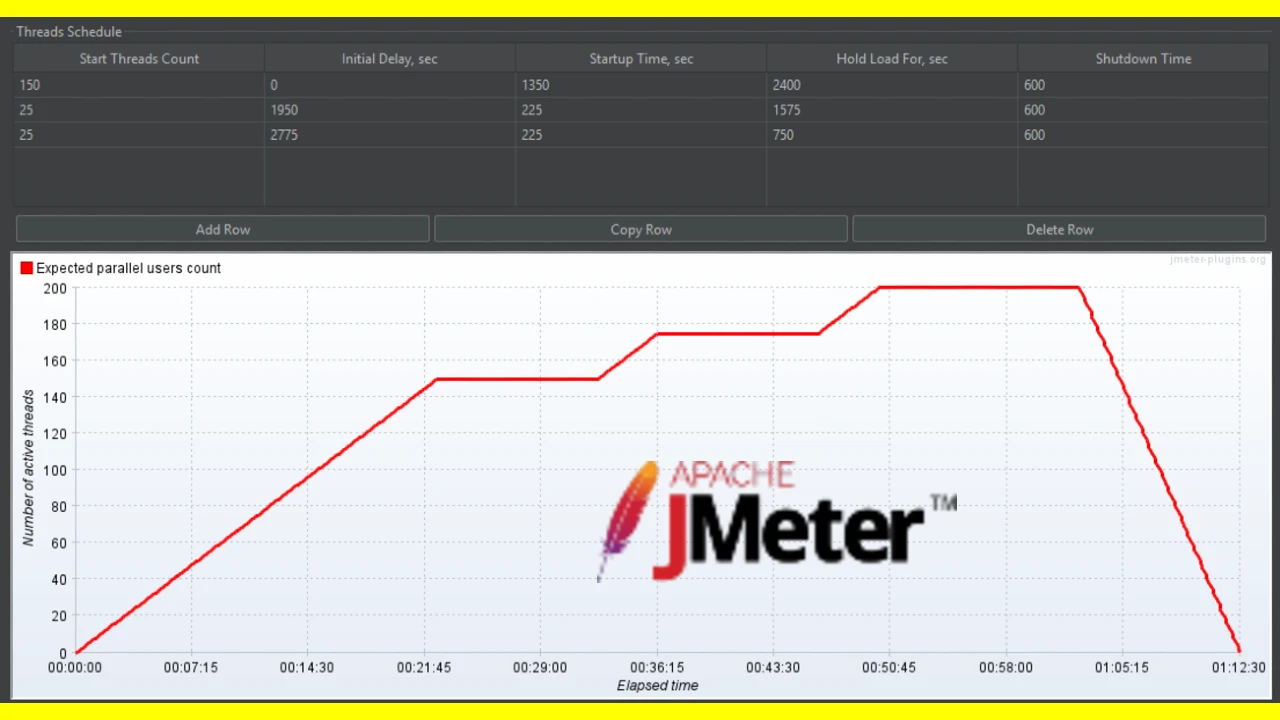

During the JMeter stress test, we simulated API requests over a sustained period. The test is available in the Postman-Integration-Jmeter-Stress-test GitHub repository. The image above shows the JMeter results.

Below, I provide an analysis of the key metrics captured and what they signify:

Key Metrics

- Total Requests: 16,000

- Total Test Duration: 3 minutes and 21 seconds

- Throughput: 79.7 requests per second

- Response Times:

  - Minimum: 351 ms

  - Maximum: 2,313 ms

  - Overall Average: 1,550 ms
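As a quick sanity check, the reported throughput can be recomputed from the total request count and the test duration. This is only a minimal sketch using the figures listed above. The small gap between the result and the reported 79.7 requests per second is probably due to the duration being rounded to whole seconds, though that is my assumption.

```python
# Figures taken from the Key Metrics list above.
total_requests = 16_000
duration_s = 3 * 60 + 21  # 3 minutes 21 seconds = 201 seconds

# Throughput is simply completed requests divided by elapsed time.
throughput = total_requests / duration_s
print(f"{throughput:.1f} requests per second")  # prints "79.6 requests per second"
```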

Detailed Observations

- Consistent Throughput: The test maintained an average of 79.7 requests per second, peaking at around 89 requests per second during certain phases. This indicates stable performance under load.

- Response Time Trends:

  - The average response time varied across phases, starting at 526 ms and increasing as the number of active users scaled.

  - The highest average of 1,779 ms occurred when the system handled the maximum concurrent load.

- Concurrency and Load:

  - The test gradually scaled up to 160 concurrent threads, simulating high user activity.

  - All threads completed successfully without errors, demonstrating system stability.

- Error Rate: 0.00%. No request failures were recorded, indicating the API handled all requests correctly even under heavy load.
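The observations above can be reproduced from a JMeter results file. The sketch below assumes the results were saved as CSV with a header row and uses three of JMeter's default CSV columns (`timeStamp`, `elapsed`, `success`). The sample rows and the `summarize` helper are hypothetical, made up purely for illustration. They are not data from this test run.

```python
import csv
import io

# Hypothetical sample rows in JMeter's CSV results layout, trimmed to the
# three columns used here. The values are invented for illustration only.
SAMPLE_JTL = """timeStamp,elapsed,success
1700000000000,400,true
1700000000250,900,true
1700000000500,1500,false
1700000000750,2000,true
"""

def summarize(jtl_text):
    """Return min/max/average elapsed time (ms) and error rate (%)."""
    rows = list(csv.DictReader(io.StringIO(jtl_text)))
    elapsed = [int(row["elapsed"]) for row in rows]
    # A sample counts as failed when its "success" column is not "true".
    failures = sum(1 for row in rows if row["success"].strip().lower() != "true")
    return {
        "samples": len(rows),
        "min_ms": min(elapsed),
        "max_ms": max(elapsed),
        "avg_ms": sum(elapsed) / len(elapsed),
        "error_rate_pct": 100 * failures / len(rows),
    }

summary = summarize(SAMPLE_JTL)
print(summary)
```

For a real run, the same function could be given the contents of the results file instead of the sample string. Extra columns in the file are simply ignored by `csv.DictReader`.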

Performance Insights

The test results show that the API can handle 160 concurrent threads and maintain a consistent request throughput without errors. However, response times grew significantly with load: the average rose from 526 ms at the start to 1,779 ms at maximum concurrency, and the slowest request took 2,313 ms.
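One way to cross-check these numbers is Little's Law, which says the average number of requests in flight equals throughput multiplied by average response time. The sketch below assumes the threads sent requests back to back with no think time, which the results do not confirm. It also pairs the peak throughput with the highest average response time, although the two may not have occurred in the same phase. Treat the output as a rough estimate only.

```python
def estimated_concurrency(throughput_rps, avg_response_ms):
    """Little's Law: requests in flight = throughput * response time."""
    return throughput_rps * (avg_response_ms / 1000)

# Whole-test averages from the Key Metrics list.
overall = estimated_concurrency(79.7, 1550)

# Peak throughput paired with the highest phase average (an assumption).
peak = estimated_concurrency(89, 1779)

print(f"overall: {overall:.1f} requests in flight")
print(f"peak:    {peak:.1f} requests in flight")
```

Under these assumptions, the peak estimate comes out at roughly 158 requests in flight, close to the 160 configured threads. That is consistent with nearly every thread waiting on a response at full load, so throughput at that point is limited by response time rather than by the load generator.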

Recommendations

- Performance Optimization: Investigate potential bottlenecks causing response time spikes when under load.

- Scalability Testing: Increase concurrent threads beyond 160 to test the system’s true scalability limits.

- Resource Allocation: Consider optimizing server resources or caching strategies to handle large traffic loads efficiently.